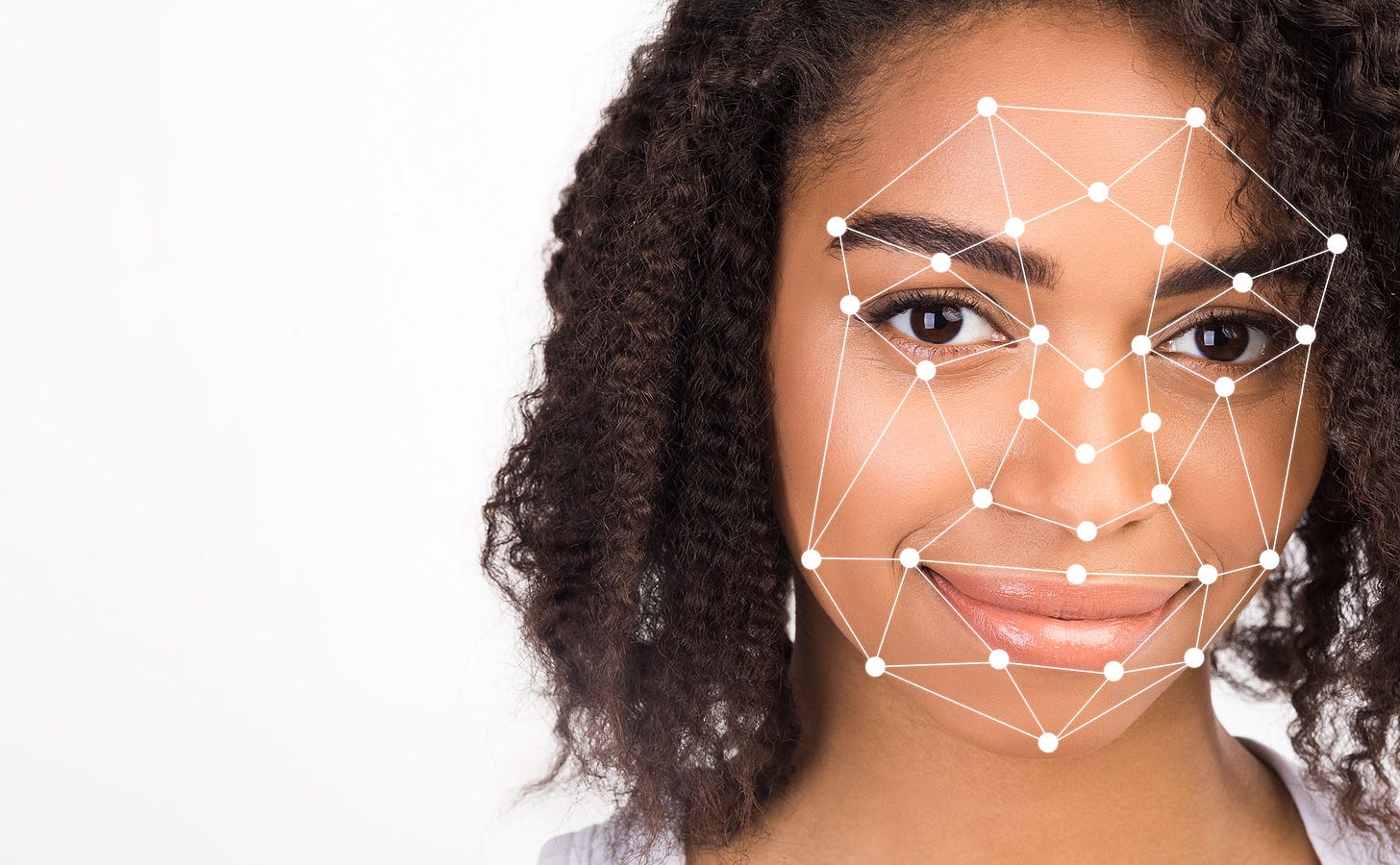

The dangers of facial recognition technology / Humans + Tech - #33

Big technology companies are calling for the regulation of facial recognition technology.

Hi,

I covered facial recognition and its dangers in Issue #13 when the news of Clearview AI came to light.

This week’s news was again dominated by facial recognition, as big technology companies ended or temporarily halted their development of the technology or their collaborations with law enforcement.

On Monday, IBM announced that they are ending all research, development, and production of facial recognition technology [The Washington Post], citing the potential for police to use the technology to violate basic human rights and freedoms.

On Wednesday, Amazon implemented a one-year moratorium on police use of Rekognition [BBC], their facial recognition software. Amazon called for governments to put in place stronger regulations to govern the ethical use of facial recognition technology.

On Thursday, Microsoft announced that they won’t sell facial recognition to police until there are federal laws governing how it can be deployed safely and without infringing on human rights [TechCrunch].

🎩 Hat tip to Akshay for sharing some of the links mentioned in this post. You can connect with Akshay on Twitter or LinkedIn.

Responding to pressure?

It appears that these companies are only folding under pressure from the Black Lives Matter protests against police brutality. Would these actions have been taken without the momentum that these protests have gathered? It seems unlikely.

In April 2019, James Vincent, reporting for The Verge, said that AI researchers from Google, Facebook, Microsoft, and a number of top universities were calling on Amazon to stop selling its facial recognition technology to law enforcement because the technology was flawed, with higher error rates for darker-skinned and female faces. Amazon defended its technology at the time and called the evaluations misleading.

Earlier this year, Microsoft supported a pro-surveillance bill in California to legitimize the use of facial recognition systems by both law enforcement and private companies [ACLU]. And barely a year ago, a Financial Times investigation found that Microsoft had created a database of 10 million faces [Financial Times]. Microsoft only removed the dataset after the investigation revealed that many of the people represented in it were unaware of this and had not consented to their pictures being used.

It is only due to the recent pressure from BLM activism, which has highlighted racial discrimination by law enforcement, that these companies have been forced to reevaluate their stance.

Dangers of facial recognition technology

The temporary moratorium from the big technology companies is not going to be enough to stop the use of this imperfect technology. There are hundreds of small and medium-sized companies, like Clearview AI, that develop facial recognition technology and continue to sell their products to both law enforcement and private companies.

Shira Ovide interviewed Timnit Gebru, a leader of Google’s ethical artificial intelligence team, for her On Tech newsletter [The New York Times] on Tuesday:

Ovide: What are your concerns about facial recognition?

Gebru: I collaborated with Joy Buolamwini at the M.I.T. Media Lab on an analysis that found very high disparities in error rates [in facial identification systems], especially between lighter-skinned men and darker-skinned women. In melanoma screenings, imagine that there’s a detection technology that doesn’t work for people with darker skin.

I also realized that even perfect facial recognition can be misused. I’m a black woman living in the U.S. who has dealt with serious consequences of racism. Facial recognition is being used against the black community. Baltimore police during the Freddie Gray protests used facial recognition to identify protesters by linking images to social media profiles.

A Washington Post article in December 2019 reported that a federal study on facial recognition found that Asian and African American people were up to 100 times more likely to be misidentified than white men.

Lawmakers on Thursday said they were alarmed by the “shocking results” and called on the Trump administration to reassess its plans to expand facial recognition use inside the country and along its borders. Rep. Bennie G. Thompson (D-Miss.), chairman of the Committee on Homeland Security, said the report shows “facial recognition systems are even more unreliable and racially biased than we feared.”

Facial recognition technology in use worldwide

China has the biggest deployment of facial recognition cameras with about 200 million cameras installed nationwide as of last year. It is expected that they will add 400 million more by 2021 [US News].

In Hong Kong, activists are increasingly worried that China is using facial recognition technology to track and identify protesters [Forbes].

AI-powered facial recognition CCTV cameras are being deployed across many countries in Africa as well [iAfrikan Daily Brief].

NEC’s live facial recognition technology is being used in Argentina, India, the UK, and 67 other countries [OneZero]. Live camera feeds are analysed in real time and compared to photos of wanted individuals. Authorities are immediately alerted to any matches. In Argentina, this led to the wrongful detention of several individuals [OneZero].

Russia is using 100,000 cameras equipped with facial recognition technology to enforce quarantine during coronavirus lockdown [France24].

Visual Capitalist has created an infographic, The State of Facial Recognition Around the World [Visual Capitalist], which lists the status of facial recognition technology and the tools used in countries and regions around the world.

Are you born a criminal?

As if real-time facial recognition being deployed to catch wanted individuals were not enough, a group from Harrisburg University say they have developed automated biometric facial recognition software that can predict criminal behaviour in an individual [BiometricUpdate.com]. They say it can predict criminality from a picture of a face with 80% accuracy and is intended to help law enforcement prevent crime. Completely unacceptable! Taken at face value, this means that roughly 1 in 5 predictions will be wrong, so innocent people will be profiled as potential criminals and constantly surveilled as a result. And even those flagged "correctly" will be subjected to surveillance based on nothing more than a prediction that they will commit a crime.
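To see why a headline figure like "80% accuracy" deserves suspicion, here is a rough back-of-the-envelope sketch in Python. Everything in it is an assumption made for illustration: the announcement does not say what "80% accuracy" means, so the sketch reads it as the software being right 80% of the time for both future offenders and everyone else, and it invents a population of 100,000 people of whom 1% would go on to commit a crime. None of these numbers come from the Harrisburg group.

```python
def flagged_breakdown(population, base_rate, sensitivity, specificity):
    """Split the people a classifier flags into correct and incorrect flags.

    base_rate:   assumed share of the population who would actually offend
    sensitivity: assumed share of actual offenders the software flags
    specificity: assumed share of non-offenders the software clears
    """
    actual_positives = population * base_rate
    actual_negatives = population - actual_positives
    true_positives = actual_positives * sensitivity
    false_positives = actual_negatives * (1 - specificity)
    return true_positives, false_positives


# Hypothetical inputs, chosen only to illustrate the arithmetic.
tp, fp = flagged_breakdown(
    population=100_000,
    base_rate=0.01,
    sensitivity=0.80,
    specificity=0.80,
)
share_wrong = fp / (tp + fp)
print(f"Flagged in total: {tp + fp:.0f}")
print(f"Wrongly flagged:  {fp:.0f} ({share_wrong:.0%} of everyone flagged)")
```

Under these assumed inputs, roughly 96% of the people flagged would be flagged wrongly, because the innocent majority is so much larger than the group the software is looking for. A different base rate or a different reading of "accuracy" would change the figure, but the direction of the problem stays the same.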

Ban facial recognition technology

From false medical diagnoses to risks of misidentification by law enforcement to the targeting of peaceful protesters, the dangers of imperfect facial recognition technologies touch every aspect of our lives. They pose a danger to society, human rights, and civil liberties by enabling surveillance states even in democratic countries. There are more drawbacks than advantages to this technology and, in my opinion, the only regulation that should be passed is one that bans it completely.

Other interesting articles from around the web

+ 🎥 How to Spot Police Surveillance Tools [Popular Mechanics] - A guide for protesters from Popular Mechanics on how to protect themselves from the tools the police use to track them.

+ 💡 Spies Can Eavesdrop by Watching a Light Bulb's Vibrations [WIRED] - Time to buy some lampshades. Spies can now eavesdrop on your conversations from hundreds of feet away by observing the minuscule vibrations those sounds create on the glass surface of a light bulb inside.

+ 🇪🇺 EU privacy watchdog thinks that Clearview AI is illegal [The Next Web] - Let’s hope this is true. The less mass surveillance there is, the better.

+ 🛑 Facebook pitched a new tool allowing employers to suppress words like unionize in workplace chat product [The Intercept] - I don’t know why Facebook still tries to pretend as if they care about people. They are an enabler of authoritarianism and mass surveillance and a danger to democracy.

Quote of the week

Facial recognition, completely unmonitored, can be used for very bad things. It can be used for stalking, for example.

—Eric Schmidt, former CEO of Google

I wish you privacy and a brilliant day ahead :)

Neeraj